Weighted Interval Scheduling

Sample Problem

Some time ago when working on student and course management system for my client I needed to find out how many teaching periods are there withing a given date range.

Colleges and universities have different teaching periods types e.g. semesters, trimesters, quarters, summer terms of various length. So, with a date range given, what is the maximum study duration of non-overlapping terms within that range? That’s if our aim was to spend as much time at school as possible (consider we suddenly went crazy) what teaching periods should we consider enrolling in.

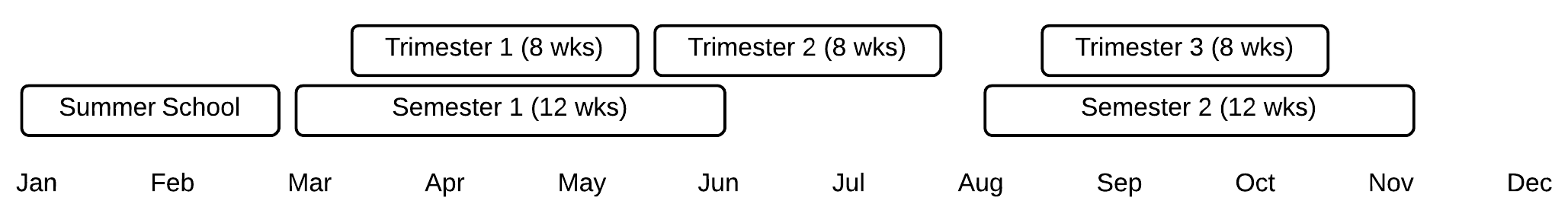

Here is sample set of available teaching periods:

Sounds like a simple quest! Take your time to figure it out what algorithm or probably heuristics would you employ?

Summer school in January??

I am sure that those who spotted that Summer School starts in January, already figured out what's going on. If not, then the explantion is trivial: I work for an Australian education provider, and there Summer is from December to February.

If you are new to the problem, chances that you would try some sort of heuristic in search for the greedy algorithm. For example you might opt for selecting whatever interval starts the earliest (Summer School in our case).

And if it wasn’t for weights (i.e. teaching periods have different durations and thus are weighted) then I bet you would definitely find such an algorithm. But it takes a bit of more involved process in case of weighted intervals.

The good news: this problem has an elegant and very efficient solution which is not hard to comprehend and implement.

Proper Problem Statement

While we definitely know what we are looking for, let’s start by redefining our problem more precisely. Informally, in Weighted Interval Scheduling each interval has a certain value (weight), and we want to select set of intervals of a maximum total weight.

So, suppose we have set of intervals $1, 2, \dots n$, let’s denote this set as $I$.

Arbitrary interval $i \in I$ has a start and finish time as $s_i$ and $f_i$ respectively. Additionally each $i$ has a weight, denoted as $w_i$.

What we want to find is a subset $O$ ($O \subseteq I$) of non-overlapping (aka mutually compatible) intervals, with the maximum possible sum of intervals’ weights:

$$O = \underset{O \subseteq I; \forall i, j \in O; f_i \le s_j \lor f_j \le s_i}{\mathrm{argmax}} \sum_{i \in O} w_i $$

Don’t panic, that formula is pretty much self explanatory:

- Locate subset $O \subseteq I$

- Any two intervals in $O$, say $i$ and $j$, must be non-overlapping. That’s why we require that either $f_j \le s_i$ or $f_i \le s_j$ is true.

- Finally we are searching for the maximum sum of intervals weights $\sum_{i \in O} w_i$, hence the argmax.

Since we have a properly defined problem as well as precisely described expected outcome, it is a good time to start designing our algorithm.

Greedy algorithm

First let’s think of Weighted Interval Scheduling problem where each interval has an equal weight i.e. $w_1 = w_2 = \dots = w_n$.

Effectively what we will be looking for is to get subset $O$ having as much intervals in it as possible. Indeed, if intervals have equal length, then the more intervals we are able to select the higher is our total weight.

It is pretty clear that (unweighted) Interval Scheduling is nothing more but a special case of a more general Weighted Interval Scheduling.

If you look into our list of teaching periods you will probably notice that you can select four intervals, maximum:

- Summer School

- Trimester 1

- Trimester 2

- Either Trimester 3 or Semester 2

The greedy strategy that happens to produce optimal solution $O$ for unweighted intervals is pretty simple: accept the interval $i$ which has the minimum finishing time $f_i$.

That’s it! You just grab interval $i$ which has the earliest $f_i$, discard any intervals that overlap with $i$, and continue until there’s no more intervals to pick from. Try it with our primitive example, you will immediately grasp what is going on.

Let me further illustrate this with code:

1class Interval(object):

2 '''Date interval'''

3

4 def __init__(self, title, start, finish):

5 self.title = title

6 self.start = int(time.mktime(datetime.strptime(start, "%d %b, %y").timetuple()))

7 self.finish = int(time.mktime(datetime.strptime(finish, "%d %b, %y").timetuple()))

8

9 def __repr__(self):

10 return str((self.title, self.start, self.finish))

11

12def schedule_unweighted_intervals(I):

13 '''Use greedy algorithm to schedule unweighted intervals

14 sorting is O(n log n), selecting is O(n)

15 whole operation is dominated by O(n log n)

16 '''

17

18 I.sort(lambda x, y: x.finish - y.finish) # f_1 <= f_2 <= .. <= f_n

19

20 O = []

21 finish = 0

22 for i in I:

23 if finish <= i.start:

24 finish = i.finish

25 O.append(i)

26

27 return O

If you need more code to look at, here is complete implementation of Interval Scheduling in Python.

Now that we have wrapped up constant weight interval scheduling, what happens to our amazing greedy strategy if intervals have different weights? It will fail miserably.

Just consider situation when we need to decide which one to pick as the last interval: Trimester 3 or Semester 2? If we follow the greedy method for selection by the smaller finishing time $f_i$, then we will have to pick Trimester 3 (instead of more longer Semester 2).

So, while unweighted intervals are easy to schedule, we need to spend more time on weighted ones!

Recursive solution

Before we proceed I have to make one assumption: all intervals are sorted by finish times i.e. $f_1 \le f_2 \le \dots \le f_n$. That’s not too much to ask, as you can always sort your data in say $O(n\log n)$ time, therefore it will not add much to our final asymptotic complexity.

Now, consider two intervals $i$ and $j$. Let’s assume that $i < j$ is to mean $f_i \le s_j$ i.e. interval $i$ comes before the interval $j$. To make things more straightforward we will define a function $p(j)$: rightmost interval $i$, where $i < j$ and intervals $i$ and $j$ are mutually compatible.

Basically, $p(j)$ will help us talk about the first interval to the left of $j$ which is not-overlapping with it. I know that rightmost non-overlapping interval to the left of the $j$, might sound complex at first, but I am sure it will settle once you meditate on it a bit.

When locating that rightmost interval we can use binary search which has an $O(\log n)$ running time. Since we need to find $p(1), p(2), \dots ,p(n)$, overall complexity is bound by $O(n \log n)$.

When it comes to Python then bisect (yes internally it is implemented as a binary search) module provides a very succinct solution:

1def calculate_previous_intervals(I):

2 '''For every interval j, calculate the rightmost mutually compatible interval i,

3 where i < j I is a sorted list of Interval objects (sorted by finish time)

4 '''

5 # extract start and finish times

6 start = [i.start for i in I]

7 finish = [i.finish for i in I]

8

9 p = []

10 for j in xrange(len(I)):

11 i = bisect.bisect_right(finish, start[j]) - 1 # rightmost interval f_i <= s_j

12 p.append(i)

13

14 return p

I also posted on GitHub the complete implementation of Weighted Interval Selection in Python.

:fire: Attention! We come to the crucial point of our discussion, where we will finally get an insight into solving the Weighted Interval Scheduling problem.

Let’s think about optimal solution $O$. This solution is what we are after, but we do not know what it looks like. However, even without knowing much about the actual solution we can infer that last interval $n$ is either part of optimal solution or not:

$$n \in O \lor n \notin O$$

Indeed, it is perfectly safe to assume such a dichotomy. And since it is a dichotomy, we can look into both possibilities and see if we can make any further inferences.

Case 1: $n \in O$

If $n$ is a part of optimal solution $O$, then we can be sure that no interval between $p(n) \dots n$ can be part of the $O$ (because all intervals within that range will be overlapping with $n$ by the definition of $p(j)$). So, if $n \in O$, we can discard intervals $p(n) + 1, p(n) + 2, \dots n-1$.

Another important conclusion is that if $n \in O$, then optimal solution $O$ must also include an optimal solution to the problem set ${1\dots p(n)}$. Indeed, if $O$ is an optimal solution and $n\in O$, then $O$ can be divided into $n$ and optimal solution to a set of intervals between $1\dots p(n)$ (we denote such a smaller solution as $O_{p(n)}$).

Case 2: $n \notin O$

When $n \notin O$ then our optimal solution is equal to the optimal solution to a smaller problem consisting of intervals ${1\dots n-1}$. Indeed, if $n$ is not a part of optimal solution, then we look for such a solution within a smaller set consisting of intervals ${1\dots n-1}$.

Finding optimal solution in set ${1\dots j}$, $O_j$

After we reviewed both cases it is obvious that in order to find optimal solution $O$ (for $n$ elements) we have to look into smaller and smaller problems in the form of ${1\dots j}$.

Let’s denote such optimal solution over intervals ${1\dots j}$ as $O_j$, and actual value (maximum sum of weights) as $OPT(j)$. So, for any given $j$ we have the very same dichotomy: either $j \in O_j$ or $j \notin O_j$, therefore we can have the crucial piece of our design:

$$OPT(j) = max(w_j + OPT(p(j)), OPT(j-1))$$

That is value of optimal solution $O_j$ is either $w_j + OPT(p(n))$ or $OPT(n-1)$, whichever is bigger.

And from this follows another related inference: some arbitrary interval $j$ is a part of optimal solution $O_j$ iff:

$$w_j + OPT(p(j)) \ge OPT(j-1)$$

At this point we have everything necessary to solve the original problem. Indeed we have all we need to compute optimal solutions for any interval $1\dots n$ and by definition of our problem statement we are after the $O_n$ solution:

1def compute_opt(j):

2 if j == 0:

3 return 0

4

5 return max(w[j] + compute_opt(p[j]), compute_opt(j - 1))

Since we recursively computing optimal solutions to smaller and smaller subsets, we recalculate the very same values over and over again, which results in exponential execution time i.e. unacceptable. The obvious solution is memoization to avoid recalculations, which will result in amazing $O(n)$ runtime.

Now say we have calculated all our optimal solutions values: $OPT[1], OPT[2], \dots OPT(n)$. What if we need (and we generally do) to have actual intervals gathered? Easy:

1# given OPT and p, find actual solution intervals in O(n)

2O = []

3def compute_solution(j):

4 if j >= 0: # will halt on OPT[-1]

5 if I[j].weight + OPT[p[j]] > OPT[j - 1]:

6 O.append(I[j])

7 compute_solution(p[j])

8 else:

9 compute_solution(j - 1)

10compute_solution(len(I) - 1)

As you see, we have used the idea that interval $j$ is in optimal solution $O_j$ iff:

$$w_j + OPT(p(j)) \ge OPT(j-1)$$

At this point we solved our original problem by designing adequate algorithm (more details on how good is our algorithm are in Algorithm Analysis section).

Dynamic programming

The pith of our algorithm is obtaining the $OPT(1)\dots OPT(n)$ list. Once we got it, everything else was trivial to unfold.

So let’s scrutinize that $OPT$ list, and see if we need a recursive solution to calculate it.

Given the formula

$$OPT(j) = max(w_j + OPT(p(j)), OPT(j-1)$$

we know that any given value of $OPT$ depends on previous values: either $OPT(p(j))$ or $OPT(j-1))$

So what if we start from the first interval and traverse to the $n$th, can we compute $OPT(j)$ values iteratively? We can! For any given $j$ to have access to previous $OPT$ values, all we need to do is to persist already calculated items as we traverse the set $I$:

1# compute OPTs iteratively in O(n), here we use DP

2OPT = collections.defaultdict(int)

3OPT[-1] = 0

4OPT[0] = 0

5for j in xrange(1, len(I)):

6 OPT[j] = max(I[j].weight + OPT[p[j]], OPT[j - 1])

And since we are iteratively building up subproblems to finally arrive to the one we are originally expected to solve $OPT(n)$, we are having fun with dynamic programming (or DP for short).

In its essence DP involves dynamically building up sub-solutions, one after another, to finally solve the case in question.

I will write more about DP, not only because the technique is essential knowledge for any decent programmer, but also because solutions involving dynamic algorithms represent real elegance and beauty of the Computer Science.

Algorithm Analysis

It is time to briefly analyse our algorithms and their worst case complexities. Should you have any issues on Big-Oh analysis the best resource on the topic is Skiena’s “Algorithm Design Manual”.

Here is a list of steps we have to take and their respective time complexities:

1. Sort intervals set $I$, so that $f_1 \le f_2 \le \dots \le f_n$

If we use something like Quicksort or Mergesort and get away with $O(n \log n)$ (on average in case of Quicksort and as a worst case for the Mergesort).

2. Calculate $p(1), p(2), \dots ,p(n)$

We iterate over $n$ different items, and for each item we need to find the first non-overlapping interval to the left of it. Suppose we use binary search to locate that non-overlapping neighbor interval (which has $O(\log n)$ complexity).

Then, since we have two growing functions $f(n) = n$ and $g(n) = \log n$, the total time complexity will be their product:

$$O(f(n)) * O(g(n)) = O(f(n) * g(n)) = O(n \log n)$$

3. Calculate $OPT(1), OPT(2), \dots ,OPT(n)$

Both memoized and iterative versions of function yield $O(n)$.

4. Calculate solution (given $OPT$ list)

Since we already have $OPT$ values calculated, recursively traversing them is an $O(n)$ operation.

5. Overall performance

When adding Big-Oh complexities the dominant function defines the whole relationship. In our case it is

$$ O(n \log n)_{\text{sort intervals}} + O(n \log n)_{\text{calculate p[1..n]}} + O(n)_{\text{calculate OPT[1..n]}} + O(n)_{\text{find solution intervals}} = O(n \log n) $$

Not bad, I believe!

Summary

That’s it for today, so let me conclude this article by quick summary:

- we briefly visited special case of unweighted intervals

- algorithm based on greedy selection proved not good enough to solve more general case of Weighted Interval Scheduling

- we then developed a recursive function to calculate $OPT[1..n]$

- and finally experienced example of DP in iterative version of $OPT$ calculation

- when we have an array of $OPT$ values, it is trivial to produce a list of actual intervals that are elements of $O_n$.

Have a nice day!

NB: I created a repository with complete implementations of both weighted and unweighted problem variants. Feel free to fork and send me PR should you locate some issues with the code!

References

- Chawla, S. CS 787: Advanced Algorithms, Lecture Notes (Lecture 3 on Dynamic Programming)

- Skiena, S. (2008) The Algorithm Design Manual

- Kleinberg, J., Tardos, E. (2006) Algorithm Design